How GIPHY’s Public API Integrates with gRPC Services

August 13, 2020

We always work hard at GIPHY to help people find the right GIF at the right time. Adoption of gRPC helps us continue to keep our services fast, stable, and fault-tolerant as we scale to over 10 billion pieces of content a day.

When the GIPHY API, which serves content to our third-party integrations, was initially developed in 2013, it was monolithic. In 2017, we began splitting it into microservices to help scale and maintain our service.

In the first stage of this separation, the original PHP app talked to the new microservices over HTTP. In 2019, we started moving to gRPC. With limited resources and a highly scaled application, integrating gRPC in PHP was a challenging task.

In this post, we’ll share what we learned from the process so that your integration will be a bit less tricky.

Step 1: generate the client

gRPC comes with a great tool, protoc, that helps with code generation. All you need is to define your service using proto syntax:

syntax = 'proto3';

package gif.proto;

service Gif {

rpc getGif(GetGifRequest) returns (GetGifResponse) {}

}

message GetGifRequest {

string id = 1;

Options options = 2;

}

message GetGifResponse {

string id = 1;

string title = 2;

}

protoc will take care of everything else:

// GENERATED CODE -- DO NOT EDIT!

namespace Gif\Proto;

class GifService extends \Grpc\BaseStub

{

/**

* @param string $hostname hostname

* @param array $opts channel options

* @param \Grpc\Channel $channel (optional) re-use channel object

*/

public function __construct($hostname, $opts, $channel = null)

{

parent::__construct($hostname, $opts, $channel);

}

/**

* @param \Gif\Proto\GetGifRequest $argument input argument

* @param array $metadata metadata

* @param array $options call options

*/

public function getGif(\Gif\Proto\GetGifRequest $argument, $metadata = [], $options = [])

{

return $this->_simpleRequest('/gif.proto.Gif/getGif', $argument, ['\Gif\Proto\GetGifResponse', 'decode'], $metadata, $options);

}

}

To automate this process, we set up Jenkins to generate a Composer package with all the clients we need in the API, so when definitions change we just need to update the package version.

Step 2: HTTP to gRPC switch

GIPHY’s API is a very high-load service and mistakes are very expensive. The first thing we had to think about was achieving an easy and fast switch between HTTP and gRPC services, so we could easily canary the service and roll back if we had any trouble.

We added an internal “throttling strategy” for request distribution:

class GifService

{

/** @var GifClientInterface */

private $grpcClient;

/** @var GifClientInterface */

private $httpClient;

public function getClient()

{

if ($condition) {

return $this->grpcClient;

}

return $this->httpClient;

}

public function getGif(string $id): ?Gif

{

return $this->getClient()->getGif($id);

}

}

$condition stands for a check against the percentage of requests we want to send to a particular service. Depending on our needs, that percentage could be stored either as a Redis key or as an environment variable.
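A minimal sketch of how such a check could be derived from a percentage, not GIPHY's actual implementation. The helper name `shouldUseGrpc`, the environment variable `GRPC_ROLLOUT_PERCENT`, and the use of a hashed request key are all assumptions made for illustration:

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical helper: decides whether a request goes to the gRPC client.
 * $percent is the share of traffic (0-100) routed to gRPC; $key is any
 * stable request attribute, for example a request ID.
 */
function shouldUseGrpc(int $percent, string $key): bool
{
    // Clamp so a misconfigured value cannot break the comparison.
    $percent = max(0, min(100, $percent));

    // crc32() returns a non-negative integer on 64-bit PHP builds. The same
    // key always lands in the same bucket, so the decision is repeatable.
    $bucket = crc32($key) % 100;

    return $bucket < $percent;
}

// The percentage could come from an environment variable (the name is an
// assumption); a Redis lookup would slot in the same way.
$percent = (int) (getenv('GRPC_ROLLOUT_PERCENT') ?: 0);

$useGrpc = shouldUseGrpc($percent, 'request-123');
```

Hashing a stable key instead of drawing a random number keeps the routing decision repeatable for a given request, which can make canary comparisons easier to reason about.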

But simple solutions are not always easy to implement. Incompatible interfaces like these were a common situation for us:

class GifHttp

{

public function getGif(string $id, Params $metadataParams, array $options = []): ?Gif

{

}

}

// GifServiceGRPC here

class GifGRPC

{

public function getGif(string $id, array $fetchOptions = [], bool $forceReadFromMaster = false): ?Gif

{

}

}

Because this was a frequent issue, we introduced a RequestOptionsInterface interface to resolve incompatibilities:

interface RequestOptionsInterface

{

public function getHttpOpts(): array;

public function getGrpcOpts(): array;

}

interface GifClientInterface

{

public function getGif(string $id, RequestOptionsInterface $requestOptions): ?Gif;

}

class RequestOptions implements RequestOptionsInterface

{

public function getHttpOpts(): array

{

return ['http-param' => 'value']; // http-call specific args

}

public function getGrpcOpts(): array

{

return ['grpc-param' => 'value']; // grpc-call specific args

}

}

class GifHttp implements GifClientInterface

{

public function getGif(string $id, RequestOptionsInterface $requestOptions): ?Gif

{

$options = $requestOptions->getHttpOpts();

...

}

}

class GifGRPC implements GifClientInterface

{

public function getGif(string $id, RequestOptionsInterface $requestOptions): ?Gif

{

$options = $requestOptions->getGrpcOpts();

...

}

}

class GifService

{

/** @var GifClientInterface */

private $client;

public function getGif(string $id): ?Gif

{

$opts = new RequestOptions();

// fill in $opts depending on a client;

return $this->getClient()->getGif($id, $opts);

}

}

As a result, we were able to define a common interface and make client-specific calls.

Error handling

Generated clients only have request logic, so we had to implement our own error handling. We use Guzzle as our HTTP client, so we wanted something similar to minimize changes to service layers.

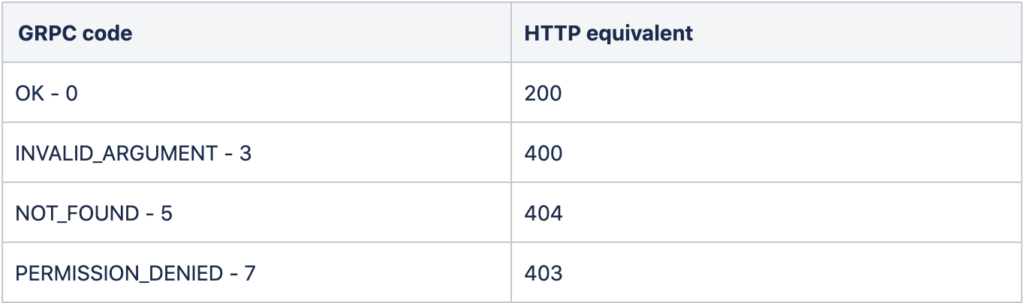

The gRPC error model is based on status codes, so it was easy to map them to HTTP codes. For example:
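A minimal sketch of such a mapping. The exact table GIPHY used is not shown in this post; the one below follows the conventional HTTP mapping documented for google.rpc.Code, and the names `GRPC_TO_HTTP` and `grpcStatusToHttp` are hypothetical. The numeric gRPC codes are written out so the sketch runs without the grpc extension:

```php
<?php
declare(strict_types=1);

// gRPC status code => HTTP status code (conventional mapping, an assumption
// about what a mapping like the one described above could look like).
const GRPC_TO_HTTP = [
    0  => 200, // OK
    1  => 499, // CANCELLED (client closed request)
    2  => 500, // UNKNOWN
    3  => 400, // INVALID_ARGUMENT
    4  => 504, // DEADLINE_EXCEEDED
    5  => 404, // NOT_FOUND
    6  => 409, // ALREADY_EXISTS
    7  => 403, // PERMISSION_DENIED
    8  => 429, // RESOURCE_EXHAUSTED
    9  => 400, // FAILED_PRECONDITION
    10 => 409, // ABORTED
    11 => 400, // OUT_OF_RANGE
    12 => 501, // UNIMPLEMENTED
    13 => 500, // INTERNAL
    14 => 503, // UNAVAILABLE
    15 => 500, // DATA_LOSS
    16 => 401, // UNAUTHENTICATED
];

/** Hypothetical helper: falls back to 500 for codes outside the table. */
function grpcStatusToHttp(int $grpcCode): int
{
    return GRPC_TO_HTTP[$grpcCode] ?? 500;
}

$httpCode = grpcStatusToHttp(5); // NOT_FOUND maps to 404
```

With a table like this, service layers that already branch on HTTP status codes from Guzzle exceptions could keep doing so after the switch.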

We found Guzzle’s handlers and middlewares were well documented, so we reused this concept not only for error handling but also for retrying requests and tracing. In general, we have a chain of middlewares:

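class ErrorMiddleware

{

private $next;

public function __construct(callable $next, $metadata = [])

{

$this->next = $next;

}

public function __invoke(): array

{

[$reply, $status] = ($this->next)();

if ($status->code !== \Grpc\STATUS_OK) {

... // detect, log, raise error

throw new $exception($message, $code);

}

return [$reply, $status];

}

}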

class GrpcService

{

public function call(string $method, ...$arguments)

{

$callStack = function () use ($method, $arguments) {

return $this->client->{$method}(...$arguments)->wait();

};

$callStack = new RetryMiddleware($callStack, $metadata);

$callStack = new ErrorMiddleware($callStack, $metadata);

$callStack = new OpentracingMiddleware($callStack, $metadata);

[$reply, $status] = $callStack();

return $reply;

}

}

Benefits:

- simple

- pluggable

- testable
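To illustrate how one link of such a chain could look, here is a minimal sketch of a retry middleware. It is an assumption, not the RetryMiddleware GIPHY runs: the retry condition (only the gRPC UNAVAILABLE status, code 14), the `$maxAttempts` parameter, and the absence of any backoff are choices made for brevity. The stub callable stands in for a real client call:

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical retry middleware: wraps the next callable in the chain and
 * re-invokes it while the returned status is retryable.
 */
final class RetryMiddleware
{
    private const STATUS_UNAVAILABLE = 14; // gRPC UNAVAILABLE

    /** @var callable */
    private $next;

    /** @var int */
    private $maxAttempts;

    public function __construct(callable $next, array $metadata = [], int $maxAttempts = 3)
    {
        $this->next = $next;
        $this->maxAttempts = max(1, $maxAttempts);
    }

    public function __invoke(): array
    {
        $attempt = 0;
        do {
            $attempt++;
            [$reply, $status] = ($this->next)();
            $retryable = $status->code === self::STATUS_UNAVAILABLE;
        } while ($retryable && $attempt < $this->maxAttempts);

        return [$reply, $status];
    }
}

// Usage with a stub that reports UNAVAILABLE twice and then succeeds.
$calls = 0;
$stub = function () use (&$calls): array {
    $calls++;
    $code = $calls < 3 ? 14 : 0;
    return ['reply-' . $calls, (object) ['code' => $code]];
};

[$reply, $status] = (new RetryMiddleware($stub))();
```

Because every middleware takes a callable and returns the same `[$reply, $status]` pair, each one can be unit tested with a stub like the one above, without a running gRPC server.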

Testing

Prior to transitioning to gRPC, there were already many acceptance tests in the API. We used PHPUnit’s TestListener interface and a custom @throttle annotation to manage our throttling strategy and run the existing tests against the new service:

class ThrottleAnnotationListener implements TestListener

{

public function startTest(Test $test)

{

$annotations = $test->getAnnotations();

... //get throttle annotation value

$this->setSwitch($key, $value);

}

public function endTest(Test $test, $time)

{

// revert switch value

}

}

// Test

class ServiceTest extends TestCase

{

public function testSomething()

{

...

}

/**

* @throttle switch-grpc=1

*/

public function testSomethingWithSwitch()

{

$this->testSomething();

}

}
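The listener above leaves out how the annotation value is read. A minimal sketch of that step, assuming the annotation always has the form `@throttle key=value`; the function name `parseThrottleAnnotations` is hypothetical, and it works on a raw docblock string (such as the one returned by `ReflectionMethod::getDocComment()`) rather than on PHPUnit's own annotation array:

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical parser: extracts "@throttle key=value" pairs from a docblock.
 *
 * @return array<string, string> switch name => switch value
 */
function parseThrottleAnnotations(string $docComment): array
{
    $switches = [];
    $pattern = '/@throttle\s+([\w-]+)=(\S+)/';

    if (preg_match_all($pattern, $docComment, $matches, PREG_SET_ORDER)) {
        foreach ($matches as $match) {
            // $match[1] is the switch name, $match[2] its value.
            $switches[$match[1]] = $match[2];
        }
    }

    return $switches;
}

$doc = <<<'DOC'
/**
 * @throttle switch-grpc=1
 */
DOC;

$switches = parseThrottleAnnotations($doc);
```

A listener could then call its setSwitch method for each pair in startTest and restore the previous values in endTest.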

Step 3: rollout and results

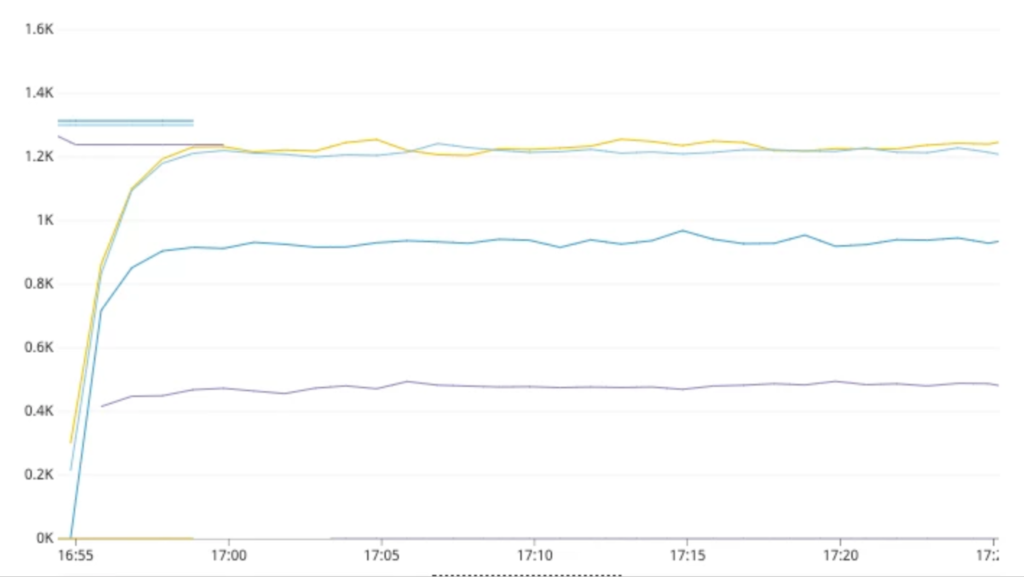

The first thing we noticed was how stable communication with the gRPC services was. Connection errors were gone after the switch:

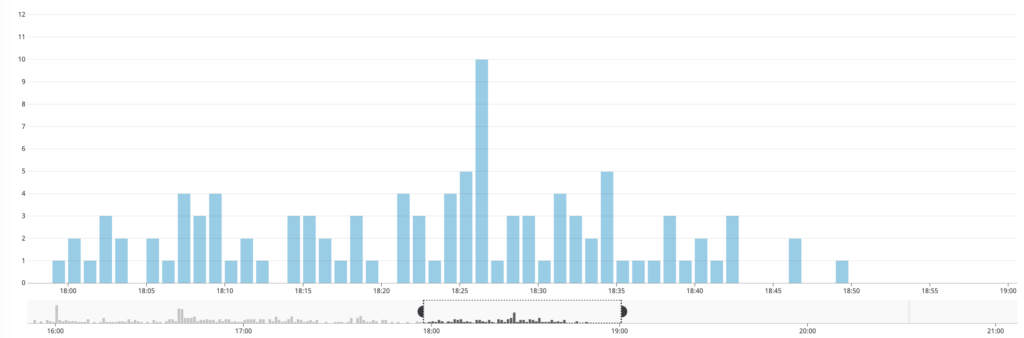

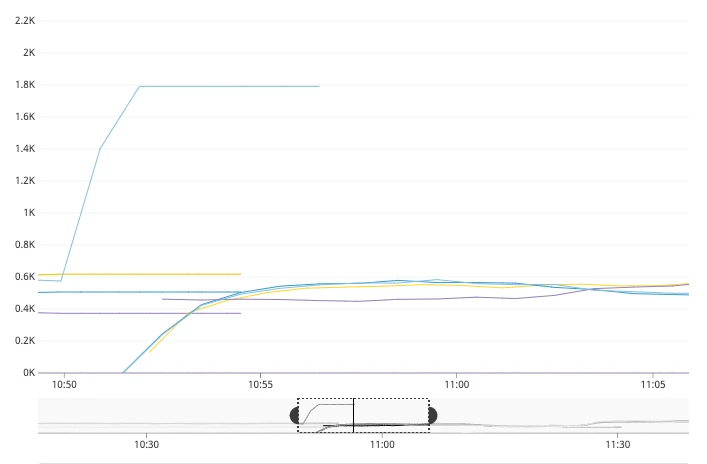

But there was also something unexpected: request balancing. gRPC runs over HTTP/2, which keeps TCP connections open for a long time and multiplexes requests over them, so traffic is not balanced across pods out of the box. This is what we got when we tested it the first time (throughput distribution per pod of Scala gRPC server):

As you can see, throughput differed from pod to pod: some pods were overloaded, while others were doing almost nothing. This is because PHP always reuses existing underlying connections rather than creating a new connection for each request.

Ideally, L7 load-balancing would be delegated to a service mesh, and our services would not have to be aware of their runtime environment. Istio and Linkerd are two popular service meshes that can be run on Kubernetes and provide L7 load-balancing as a core feature. At GIPHY, we’ve been keenly following the Istio project, and are actively working on its rollout. However, since we are currently tackling the last set of hurdles around minimizing tail latencies and connection exhaustion when communicating with some non-mesh services, we aren’t running all our production traffic through an Istio service mesh.

In an engineering organization, the development and rollout of projects can be incongruous. Rather than making a service mesh a prerequisite for rolling out gRPC, we decided to come up with a direct solution to the load-balancing issue. The solutions we describe here illustrate the considerations that need to be made in the absence of native L7 load-balancing.

Our Scala microservices use the k8s API for load balancing. Each Scala gRPC service subscribes to k8s state modification events. (For more about using the k8s API, see https://kubernetes.io/docs/reference/using-api/api-concepts/.)

So Scala gRPC ↔︎ Scala gRPC communication did not suffer from this issue. Throughput and CPU load per pod were pretty even, as we kept up-to-date information about which pods we could connect to.

Our goal was to achieve the same results with minimal changes in our Kubernetes cluster, so we started digging into the server side of the gRPC services.

It turned out that NettyServerBuilder allows setting a maxConnectionAge property:

Sets a custom max connection age, connection lasting longer than which

will be gracefully terminated. An unreasonably small value might be

increased. A random jitter of +/-10% will be added to it.

Long.MAX_VALUE nano seconds or an unreasonably large value will

disable max connection age.

public NettyServerBuilder maxConnectionAge(long maxConnectionAge, TimeUnit timeUnit)

Setting this value to 10 minutes causes the server to close connections older than that. When the connections close, it causes PHP to reconnect and re-resolve the service name, thus obtaining up-to-date information about pods. After applying these changes, these were the results:

In practice, you need to find a value for maxConnectionAge that satisfies your use-case. Setting it too high will cause load-balancing issues for extended periods of time because the service will re-use the existing connections as long as possible and new pods will barely get any traffic. On the other hand, setting this value too low will cause frequent reconnections and will kill all the benefits of gRPC and HTTP/2.

In our case, 10 minutes for maxConnectionAge was a good trade-off. After service deployments, it took around 10 minutes to get an even load on all new pods.

Step 4: adjustments

gRPC is flexible, especially when it comes to request settings. You are able to control request time using deadlines.

$options = [

'update_metadata' => function (array $metadata) use ($app) {

$metadata['grpc-timeout'] = [GRPC_DEADLINE];

return $metadata;

}

];

$client = new GifService($host, $options);

// or set it per call through the timeout call option

$client->getGif(new GetGifRequest(), [], ['timeout' => GRPC_DEADLINE]);

This way the server can detect that the client has canceled the request or that the deadline has been exceeded, and stop execution:

2020-02-02 09:24:27,044 [grpc-default-executor-8479] WARN c.g.s.g.s.SlowCallDumperServerInterceptor$ - Canceled gRPC method WrappedArray(gif.proto.getGif origin=api took 100ms with params gif_id: "3o6gbbuLW76jkt8vIc")
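When one API request fans out to several gRPC calls, the value passed as the timeout can be derived from the time left in an overall budget. The sketch below is an assumption for illustration, not code from GIPHY's API: the helper name `remainingTimeoutMicros` and the idea of a per-request budget are hypothetical. It returns microseconds, which is the unit the PHP gRPC library uses for the timeout call option:

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical helper: time left in a request budget, in microseconds.
 *
 * @param float      $startedAt Unix timestamp (seconds) when the request began
 * @param int        $budgetMs  total time allowed for the request, in ms
 * @param float|null $now       current timestamp; defaults to microtime(true)
 */
function remainingTimeoutMicros(float $startedAt, int $budgetMs, ?float $now = null): int
{
    $now = $now ?? microtime(true);
    $elapsedMs = (int) round(($now - $startedAt) * 1000);

    // Never return a negative timeout once the budget is used up.
    $remainingMs = max(0, $budgetMs - $elapsedMs);

    return $remainingMs * 1000;
}

// Example: 200 ms of a 500 ms budget already spent.
$timeout = remainingTimeoutMicros(100.0, 500, 100.2);

// The value would then be passed as a call option.
$options = ['timeout' => $timeout];
```

A result of 0 means the budget is already spent, so a caller would likely skip the call rather than send a request that is bound to exceed its deadline.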

Conclusions

So what was the result?

First of all, we improved application health: connection errors decreased dramatically when we rolled out the new integrations.

Generated code with additional type checks resulted in stricter types in the app, which makes all our code safer. (At the time we were working on integrations, PHP didn’t have typed properties.)

We are able to integrate new services faster, as the team only has to learn the interface definition language and can focus on business logic.

— Serhii Kushch, Senior Software Engineer

Serhii comes to us via Proxet