How GIPHY uses Fastly to Achieve Global Scale

April 20, 2021 by Ethan Lu

GIPHY serves a lot of GIF media content: over 10 billion pieces of content per day, in fact. In addition to media requests, which represent the actual downloads of GIFs, we also provide public API and SDK services that developers use in their products to give their users access to our enormous library.

As with many tech companies that handle large daily traffic volumes, we have scalability challenges. Our systems have to handle a high volume of requests (in the 100K requests per second range) and respond with low latency. There's nothing worse than waiting for something to load, especially a GIF!

This is where an edge cloud platform plays a role: instead of making our AWS servers handle every request that comes our way, an edge cloud platform caches as much of the media content and search result JSON payloads as possible. This works well because neither media content nor API responses change often. The edge cloud platform servers also distribute the request load across regions. We use Fastly as our edge cloud platform provider to help us serve billions of pieces of content to our users.

Fastly Solution

Fastly provides a variety of features that allow us to deliver content at scale. These features can be broadly categorized as:

– Cache Layering

– Cache Management

– Edge Computing

Cache Layering

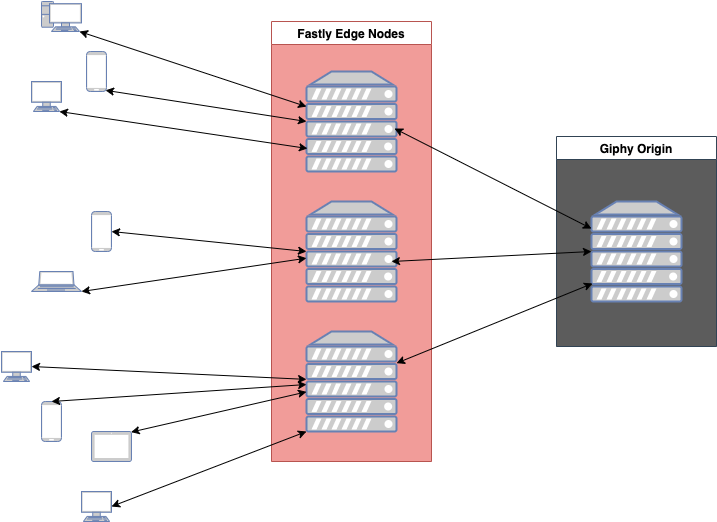

A basic edge cloud platform setup has the content cached at the edge. These server nodes are distributed globally and deliver the cached content to users making requests in their region. If an edge node does not have the content, it makes a request to our origin server to retrieve it.

This single-layer setup has a drawback. Each edge node maintains its own cache based on the requests from its region. A new piece of content may not be cached on any of the edge nodes yet, which can lead to surges in traffic to our origin servers as each edge node repeats the request for the same content. Viral content often exhibits this behavior as it gains traction.

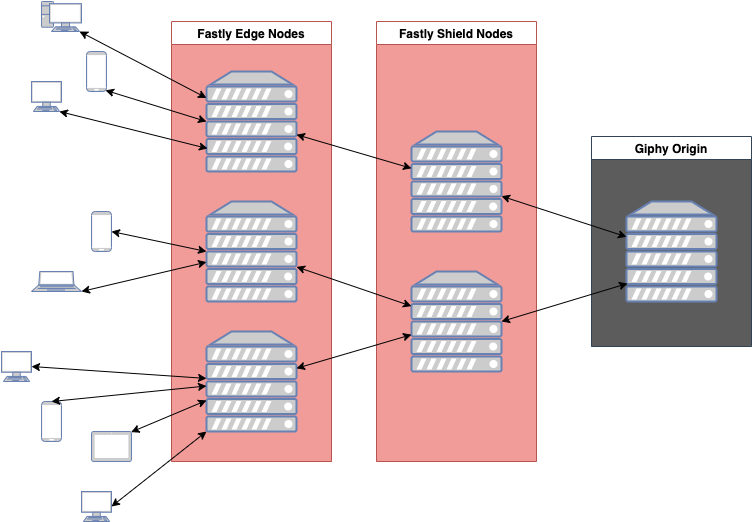

Fastly offers a second layer of cache service called the Origin Shield. Edge nodes that do not have the requested content cached can now retrieve it from the Origin Shield layer, and the request only needs to reach our origin server if the Origin Shield does not have it either.
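To make the benefit concrete, here is a toy Python model (not Fastly's implementation) of what happens when a brand-new GIF is requested once in each of 50 regions. The class names, the region count, and the media path are hypothetical, and the model ignores TTLs, request collapsing, and network effects; it only counts how many requests reach origin with and without a shield layer.

```python
class Origin:
    """Stand-in for the origin servers; counts how many requests reach it."""

    def __init__(self):
        self.fetches = 0

    def get(self, url):
        self.fetches += 1
        return f"content for {url}"


class CacheNode:
    """A cache that asks its upstream (another cache or the origin) on a miss."""

    def __init__(self, upstream):
        self.upstream = upstream
        self.store = {}

    def get(self, url):
        if url not in self.store:
            self.store[url] = self.upstream.get(url)
        return self.store[url]


def origin_fetches(regions, use_shield):
    """Count origin hits when a brand-new GIF is requested once in every region."""
    origin = Origin()
    # With shielding, every edge node's upstream is one shared shield cache.
    upstream = CacheNode(origin) if use_shield else origin
    edges = [CacheNode(upstream) for _ in range(regions)]
    for edge in edges:
        edge.get("/media/new_viral_gif.gif")
    return origin.fetches


print(origin_fetches(50, use_shield=False))  # 50
print(origin_fetches(50, use_shield=True))   # 1
```

Without the shield, every edge miss becomes an origin request; with it, only the shield's first miss does.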

Cache Management

Now that the content is cached on the edge and Origin Shield, we need ways to manage their caching policies. Not all content should stay cached for the same duration, or TTL (Time to Live). For example, the information on an individual GIF will not change that much, so its API response can be cached over a relatively long period of time. On the other hand, the API response for the Trending Endpoint, which returns a continuously updated list of currently trending GIFs, would need to be on a short TTL due to the nature of trends.

Fastly is powered by Varnish, so all of the configuration is expressed as Varnish Configuration Language (VCL) code. Both the edge and the Origin Shield run VCL, so we can set up different cache TTLs based on API endpoint paths with some simple VCL code:

```vcl
# in vcl_fetch
if (req.url ~ "^/v1/gifs/trending") {
  # set 10 minute ttl for trending responses
  set beresp.ttl = 600s;
  return(deliver);
}
```

The cache TTL does not always have to be set in VCL. When a request reaches origin because a cacheable item is missing or stale, origin can encode cache control instructions in its response, and we just need to set up the VCL so that those instructions take precedence. From origin, we propagate this decision to Fastly's Origin Shield and edge nodes by setting cache control headers in the API response, specifically the Surrogate-Control header, because that header is intended only for Fastly nodes. So we can update the VCL above to prioritize Surrogate-Control (and any cache directives in Cache-Control) over the endpoint cache policies like this:

```vcl
# in vcl_fetch
if (beresp.http.Surrogate-Control ~ "max-age" || beresp.http.Cache-Control ~ "(s-maxage|max-age)") {
  # upstream set some cache control headers, so Fastly will use the TTL from those headers
  return(deliver);
} else {
  # no cache headers, so use cache policies for endpoints
  if (req.url ~ "^/v1/gifs/trending") {
    # set 10 minute ttl for trending responses
    set beresp.ttl = 600s;
    return(deliver);
  }
}
```
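The decision this VCL makes can be modeled in a few lines of Python, which is handy for reasoning about which TTL a response will end up with. This is a sketch, not Fastly's code: the header precedence (Surrogate-Control max-age, then Cache-Control s-maxage, then Cache-Control max-age) reflects our reading of Fastly's caching rules, and `ENDPOINT_TTLS`, `DEFAULT_TTL`, and the function names are hypothetical.

```python
import re

# Hypothetical endpoint policies mirroring the VCL above: path prefix -> TTL in seconds.
ENDPOINT_TTLS = {"/v1/gifs/trending": 600}
DEFAULT_TTL = 3600  # assumed fallback when neither headers nor policies apply


def header_ttl(headers):
    """TTL from upstream cache headers, or None if they carry no directive.

    Assumed precedence: Surrogate-Control max-age, then Cache-Control
    s-maxage, then Cache-Control max-age.
    """
    surrogate = headers.get("Surrogate-Control", "")
    cache_control = headers.get("Cache-Control", "")
    for value, directive in (
        (surrogate, "max-age"),
        (cache_control, "s-maxage"),
        (cache_control, "max-age"),
    ):
        # Require a start-of-string, space, or comma before the directive name.
        match = re.search(rf"(?:^|[\s,]){directive}=(\d+)", value)
        if match:
            return int(match.group(1))
    return None


def cache_ttl(url, headers):
    """Header-supplied TTL wins; otherwise fall back to the endpoint policy."""
    ttl = header_ttl(headers)
    if ttl is not None:
        return ttl
    for prefix, policy_ttl in ENDPOINT_TTLS.items():
        if url.startswith(prefix):
            return policy_ttl
    return DEFAULT_TTL


print(cache_ttl("/v1/gifs/trending", {}))                                   # 600
print(cache_ttl("/v1/gifs/trending", {"Surrogate-Control": "max-age=60"}))  # 60
```

Because origin's headers are checked first, origin can shorten or lengthen a TTL for one response without a VCL deploy.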

With this setup, cached content expires on its own according to TTL policies that meet our needs, but we also need to invalidate the cache explicitly when we don't want to wait for content to expire naturally. We could simply invalidate cached content by its cache key (URL). This works well for media, but it is a bit more complicated for API responses.

For example, our API search endpoint can return the same GIF for different queries, but it isn’t feasible for us to know every possible URL that yielded that GIF if we wanted to invalidate it:

```
# the same GIF can appear in the responses of all of these API calls
https://api.giphy.com/v1/gifs/search?api_key=__KEY1__&q=haha
https://api.giphy.com/v1/gifs/search?api_key=__KEY1__&q=hehe
https://api.giphy.com/v1/gifs/search?api_key=__KEY2__&q=lol
https://api.giphy.com/v1/gifs/search?api_key=__KEY3__&q=laugh
```

For this situation, we take advantage of Fastly's Surrogate Keys! As the name suggests, a surrogate key can identify cached content, much like the cache key does. Unlike the cache key, however, a stored result can have multiple surrogate keys, and we choose what they are by listing them in the Surrogate-Key header of the origin response. Using the GIF IDs that appear in each API response as surrogate keys gives us a way to identify every piece of cached content that contains a given GIF:

```
# the same GIF (gif_id_abc) can appear in the responses of all of these API calls
https://api.giphy.com/v1/gifs/search?api_key=__KEY1__&q=haha
  Assign Surrogate Key: gif_id_abc
https://api.giphy.com/v1/gifs/search?api_key=__KEY1__&q=hehe
  Assign Surrogate Key: gif_id_abc
https://api.giphy.com/v1/gifs/search?api_key=__KEY2__&q=lol
  Assign Surrogate Key: gif_id_abc
https://api.giphy.com/v1/gifs/search?api_key=__KEY3__&q=laugh
  Assign Surrogate Key: gif_id_abc
```

We can even attach multiple surrogate keys to the same content:

```
https://api.giphy.com/v1/gifs/search?api_key=__KEY1__&q=haha
  Assign Surrogate Key: gif_id_abc gif_id_def key_KEY1 q_haha
https://api.giphy.com/v1/gifs/search?api_key=__KEY1__&q=hehe
  Assign Surrogate Key: gif_id_abc gif_id_123 key_KEY1 q_hehe
https://api.giphy.com/v1/gifs/search?api_key=__KEY2__&q=lol
  Assign Surrogate Key: gif_id_abc gif_id_321 gif_id_456 key_KEY2 q_lol
https://api.giphy.com/v1/gifs/search?api_key=__KEY3__&q=laugh
  Assign Surrogate Key: gif_id_abc key_KEY3 q_laugh
```
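On the origin side, these keys are just a space-separated list in the Surrogate-Key response header. A minimal sketch of building that value for one search response might look like the following; the `surrogate_keys` helper is hypothetical, and the `gif_id_`, `key_`, and `q_` prefixes simply follow the examples above. Because spaces separate keys, whitespace inside a query is replaced with underscores.

```python
def surrogate_keys(api_key, query, gif_ids):
    """Build a Surrogate-Key header value for one search response.

    Keys are separated by spaces, so whitespace inside the query is
    replaced with underscores.
    """
    keys = [f"gif_id_{gif_id}" for gif_id in gif_ids]
    keys.append(f"key_{api_key}")
    keys.append("q_" + "_".join(query.split()))
    # dict.fromkeys drops repeated keys while preserving their order.
    return " ".join(dict.fromkeys(keys))


response_gif_ids = ["abc", "def"]  # IDs of the GIFs in this search response
headers = {"Surrogate-Key": surrogate_keys("KEY1", "haha", response_gif_ids)}
print(headers["Surrogate-Key"])  # gif_id_abc gif_id_def key_KEY1 q_haha
```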

Surrogate keys are a powerful feature that allows us to select the appropriate cache to invalidate with great precision and simplicity. With this setup, we are able to invalidate cache for situations such as:

– Invalidate all cached API responses that contain a specific GIF

– Invalidate all cached API responses that are for a specific API key

– Invalidate all cached API responses that queried for certain words
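Each of these invalidations is a single purge call. The sketch below builds (but does not send) the requests with Python's standard library, assuming Fastly's purge API as we understand it: `POST /service/{service_id}/purge/{surrogate_key}` authenticated with a `Fastly-Key` header, an optional `Fastly-Soft-Purge: 1` header to mark content stale rather than evict it, and the `PURGE` method for invalidating a single URL. The service ID and token are placeholders.

```python
import urllib.request

FASTLY_API = "https://api.fastly.com"


def purge_url_request(url):
    """Purge a single cached object by its URL (cache key) using the PURGE method."""
    return urllib.request.Request(url, method="PURGE")


def purge_key_request(service_id, surrogate_key, api_token, soft=False):
    """Purge every cached object tagged with surrogate_key."""
    headers = {"Fastly-Key": api_token}
    if soft:
        # Soft purge marks objects stale instead of evicting them outright.
        headers["Fastly-Soft-Purge"] = "1"
    return urllib.request.Request(
        f"{FASTLY_API}/service/{service_id}/purge/{surrogate_key}",
        headers=headers,
        method="POST",
    )


# One request per invalidation scenario listed above (placeholder IDs and token).
requests_to_send = [
    purge_key_request("SERVICE_ID", "gif_id_abc", "API_TOKEN"),  # a specific GIF
    purge_key_request("SERVICE_ID", "key_KEY1", "API_TOKEN"),    # a specific API key
    purge_key_request("SERVICE_ID", "q_haha", "API_TOKEN"),      # a specific query
]
# To actually send one: urllib.request.urlopen(requests_to_send[0])
```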

Running Code At The Edge

VCL provides a lot of versatility in how we configure the edge cloud platform. We showed earlier how it can set various cache TTL policies for the edge and Origin Shield nodes, but we can also use VCL to modify the request itself.

We can have code to rewrite the incoming request URL. This comes in handy when we need to make changes to our API endpoints without troubling our consumers to update their calls.

```vcl
# in vcl_recv
if (req.url ~ "^/some-old-endpoint") {
  # rewrite to the new endpoint
  set req.url = regsub(req.url, "/some-old-endpoint", "/new-and-improved-endpoint");
}
```
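The same prefix rewrite can be expressed as a small lookup table, which makes it easy to unit-test a list of old-to-new mappings before turning them into VCL. This Python sketch is a stand-in, not something that runs on Fastly. `REWRITES` and `rewrite_url` are hypothetical names, and the prefix check mirrors the anchored regex in the VCL above.

```python
# Hypothetical table of retired endpoint prefixes and their replacements.
REWRITES = {"/some-old-endpoint": "/new-and-improved-endpoint"}


def rewrite_url(url):
    """Swap a retired path prefix for its replacement, keeping the rest of the URL."""
    for old, new in REWRITES.items():
        if url.startswith(old):
            return new + url[len(old):]
    return url


print(rewrite_url("/some-old-endpoint?api_key=KEY1"))  # /new-and-improved-endpoint?api_key=KEY1
print(rewrite_url("/v1/gifs/trending"))                # /v1/gifs/trending
```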

We can even select a percentage of the incoming requests to test experimental features. Using Fastly's randomness functions, we can add a special header to a fraction of our requests that enables new behavior on our origin server.

```vcl
# in vcl_recv
set req.http.new_feature = "0";
if (randombool(1, 10000)) {
  # 1 in 10,000 requests (0.01% of the traffic) gets to see the new feature
  set req.http.new_feature = "1";
}
```
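`randombool(numerator, denominator)` returns true with probability numerator/denominator. The Python sketch below reproduces that behavior with the standard `random` module (it is a model, not Fastly's implementation) and uses a fixed seed to show that roughly 1 in 10,000 requests gets flagged.

```python
import random


def randombool(numerator, denominator, rng=random):
    """True with probability numerator/denominator, like VCL's randombool."""
    return rng.randrange(denominator) < numerator


rng = random.Random(42)  # fixed seed so the run is repeatable
trials = 200_000
hits = sum(randombool(1, 10_000, rng) for _ in range(trials))
print(f"{hits} of {trials} requests flagged ({hits / trials:.4%})")
```

With 200,000 simulated requests, you should expect about 20 hits, give or take random variation.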

Combined with Fastly's edge dictionaries, this lets us set up different behaviors for different API keys with minimal code.

```vcl
# API keys that will have a percentage of their requests use the new feature
# (each value is the number of requests out of 10,000)
table new_feature_access {
  "__API_KEY1__": "1",
  "__API_KEY2__": "5",
  "__API_KEY3__": "1000",
}

sub vcl_recv {
#FASTLY recv
  set req.http.new_feature = "0";
  # check if the request has an API key that is set up to have a percentage of its requests use the new feature
  if (randombool(std.atoi(table.lookup(new_feature_access, subfield(req.url.qs, "api_key", "&"), "0")), 10000)) {
    set req.http.new_feature = "1";
  }
  return(lookup);
}
```
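Here is a Python model of the same per-key rollout, which can help sanity-check the percentages before deploying. It is a sketch: `NEW_FEATURE_ACCESS` mirrors the table above and `new_feature_header` is a hypothetical name. Python's `parse_qs` stands in for VCL's `subfield` (unlike `subfield`, it also percent-decodes values), and unknown keys default to "0", as in the `table.lookup` call.

```python
import random
from urllib.parse import parse_qs, urlsplit

# Mirrors the edge dictionary above: API key -> requests out of 10,000.
NEW_FEATURE_ACCESS = {"__API_KEY1__": "1", "__API_KEY2__": "5", "__API_KEY3__": "1000"}


def new_feature_header(url, rng=random):
    """Return "1" for the configured share of a key's requests, else "0"."""
    query = parse_qs(urlsplit(url).query)
    api_key = query.get("api_key", [""])[0]
    numerator = int(NEW_FEATURE_ACCESS.get(api_key, "0"))
    # Same check as randombool(numerator, 10000).
    return "1" if rng.randrange(10_000) < numerator else "0"


rng = random.Random(7)
url = "/v1/gifs/search?api_key=__API_KEY3__&q=lol"
flagged = sum(new_feature_header(url, rng) == "1" for _ in range(20_000))
print(flagged)  # roughly 2,000, i.e. about 10% of __API_KEY3__'s requests
```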

This is just scratching the surface of what VCL enables. Fastly's VCL documentation is the place to look if you want to see what else is possible!

Tips and Tricks

We use a lot of Fastly features to power the world with animated GIF content. However, configuring an edge cloud platform can get quite complex when there is so much functionality at your disposal, so here are some tips and tricks to help you along the way.

VCL Execution In Edge and Origin Shield

With a two-layer cache setup, one key thing to remember is that the same VCL code executes on both the edge and the Origin Shield. This can cause unexpected outcomes if the VCL changes request or response state.

For example, our VCL code from before sets the cache TTL for both the Origin Shield and edge nodes, using either the cache control headers from upstream or the value specified in the VCL itself:

```vcl
# in vcl_fetch
if (beresp.http.Surrogate-Control ~ "max-age" || beresp.http.Cache-Control ~ "(s-maxage|max-age)") {
  # upstream set some cache control headers, so Fastly will use the TTL from those headers
  return(deliver);
} else {
  # no cache headers, so use cache policies for endpoints
  if (req.url ~ "^/v1/gifs/trending") {
    # set 10 minute ttl for trending responses
    set beresp.ttl = 600s;
    return(deliver);
  }
}
```

Suppose for the trending endpoint, we also set the response’s Cache-Control header so we can instruct the caller on how long to cache the content on their side. This could simply be done as:

```vcl
# in vcl_fetch
if (beresp.http.Surrogate-Control ~ "max-age" || beresp.http.Cache-Control ~ "(s-maxage|max-age)") {
  # upstream set some cache control headers, so Fastly will use the TTL from those headers
  return(deliver);
} else {
  # no cache headers, so use cache policies for endpoints
  if (req.url ~ "^/v1/gifs/trending") {
    # set 10 minute ttl for trending responses
    set beresp.ttl = 600s;
    # set 30 second ttl for callers
    set beresp.http.cache-control = "max-age=30";
    return(deliver);
  }
}
```

The Origin Shield would execute this VCL, add the Cache-Control header to the response, and return it to the edge. On the edge, however, the same VCL would see that Cache-Control is already set in the response and take the first branch of the if-statement, deriving the TTL from that header. As a result, the edge nodes would use a cache TTL of 30 seconds instead of the intended 10 minutes!
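The double execution is easier to see when the same function is literally called twice. This toy Python model of the `vcl_fetch` above is a simplification, not Fastly's behavior in full: it only considers Cache-Control `max-age`, and the function name is hypothetical. It returns the TTL a node would use along with the headers it passes downstream.

```python
import re


def vcl_fetch(url, upstream_headers):
    """Toy model of the vcl_fetch above; returns (cache TTL, headers sent downstream)."""
    headers = dict(upstream_headers)
    match = re.search(r"max-age=(\d+)", headers.get("Cache-Control", ""))
    if match:
        # First branch: upstream sent a cache directive, so its max-age becomes the TTL.
        return int(match.group(1)), headers
    if url.startswith("/v1/gifs/trending"):
        headers["Cache-Control"] = "max-age=30"  # meant only for callers
        return 600, headers
    return None, headers


url = "/v1/gifs/trending"
shield_ttl, to_edge = vcl_fetch(url, {})       # origin sent no cache headers
edge_ttl, to_client = vcl_fetch(url, to_edge)  # the same VCL runs again on the edge
print(shield_ttl, edge_ttl)  # 600 30
```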

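Fortunately, Fastly provides a way to distinguish between the edge and the Origin Shield. When one Fastly node forwards a request to another, it adds a Fastly-FF header to the request, so requests arriving at the Origin Shield carry it, while requests that reach the edge directly from clients do not. We can therefore keep the TTL logic in vcl_fetch and move the caller-facing header into vcl_deliver, setting it only when Fastly-FF is absent: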

```vcl
# in vcl_fetch
if (req.url ~ "^/v1/gifs/trending") {
  # set 10 minute ttl for trending responses
  set beresp.ttl = 600s;
  return(deliver);
}

# in vcl_deliver
if (!req.http.Fastly-FF) {
  # set 30 second ttl for callers
  set resp.http.cache-control = "max-age=30";
}
```

With this change, the Cache-Control header is only set at the edge node, and our cache policies behave as expected again!
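Extending the same kind of toy Python model to the fixed configuration shows why it works. In this model, `vcl_fetch` only decides the TTL, and `vcl_deliver` adds Cache-Control only when the request has no Fastly-FF header. The function names and the Fastly-FF value are placeholders, and only Cache-Control `max-age` is modeled.

```python
import re


def vcl_fetch(url, upstream_headers):
    """TTL decision only: vcl_fetch no longer adds a Cache-Control header."""
    match = re.search(r"max-age=(\d+)", upstream_headers.get("Cache-Control", ""))
    if match:
        return int(match.group(1))
    if url.startswith("/v1/gifs/trending"):
        return 600
    return None


def vcl_deliver(req_headers, resp_headers):
    """Add the caller-facing Cache-Control only when Fastly-FF is absent (the edge)."""
    headers = dict(resp_headers)
    if "Fastly-FF" not in req_headers:
        headers["Cache-Control"] = "max-age=30"
    return headers


url = "/v1/gifs/trending"
# Shield: the request was forwarded by an edge node, so it carries Fastly-FF.
shield_ttl = vcl_fetch(url, {})
to_edge = vcl_deliver({"Fastly-FF": "placeholder-node-id"}, {})
# Edge: the request came straight from the client, so there is no Fastly-FF.
edge_ttl = vcl_fetch(url, to_edge)
to_client = vcl_deliver({}, to_edge)
print(shield_ttl, edge_ttl, to_client["Cache-Control"])  # 600 600 max-age=30
```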

Debugging and Testing

The pitfall we just described can be quite difficult to detect and debug. The VCL runs on Fastly's servers, and all we get back is the response and its headers. We can add debugging information in custom headers and inspect them in the response, but this gets unwieldy quickly.

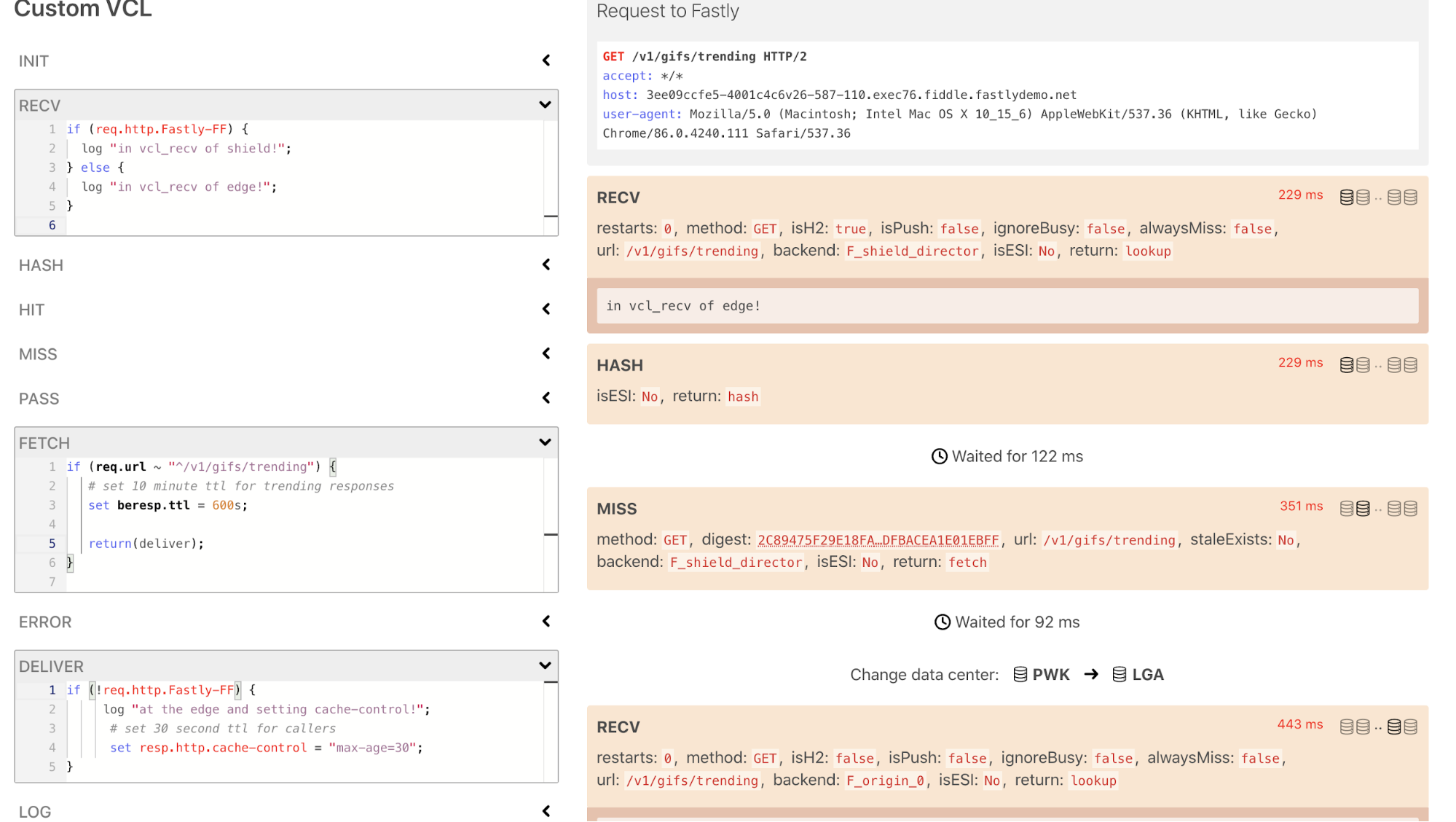

Fortunately, there is the Fastly Fiddle tool, which provides better visibility into what the VCL code does when it executes. We can simulate the various VCL code parts in this tool and get more information on how Fastly’s edge and Origin Shield servers will behave with the VCL code.

We put together a fiddle of the example above that shows how the double execution of the VCL can affect the cache TTL.

We set up the VCL in the appropriate sections on the left and execute it to see, on the right, how Fastly would have handled the request.

The picture above shows a lot of useful information about the life cycle of the request as it passes through the edge and Origin Shield nodes. In a real-world setting, the VCL can be very complex, and situations like that are where this tool really shines.

— Ethan Lu, Tech Lead, API Team